Neural Network

The aim of this project is to study neural networks.

I followed the neural network course (2004) by Marc Parizeau, professor at Laval University, Canada.

I was interested in the basics of neural networks to understand how they work rather than learning to use modern libraries.

This course requires some notions of linear algebra and analysis (calculus), which I had to acquire, mainly from the 3Blue1Brown and Khan Academy channels.

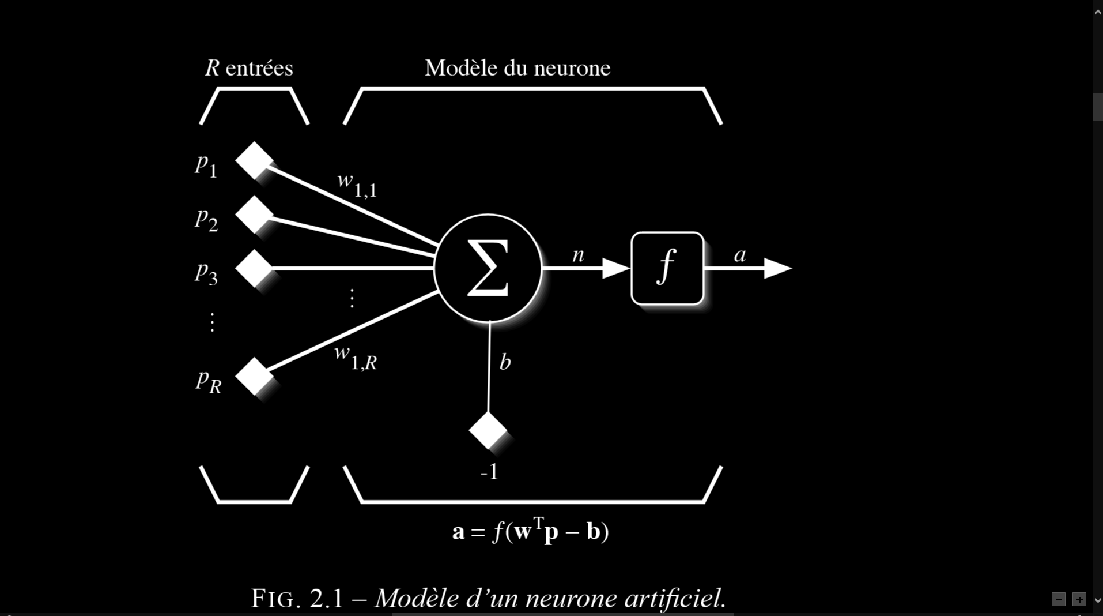

This is a neuron.

It computes the weighted sum of all its inputs:

It multiplies each input by that input's own weight, adds up the results, and subtracts the bias (or activation threshold) before returning the computed value.
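As a minimal sketch of the computation described above (the function name `neuron_output` and the numbers are only illustrative, not taken from the course), a neuron can be written in a few lines of Python:

```python
def neuron_output(inputs, weights, bias):
    """Return the weighted sum of the inputs minus the bias."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return weighted_sum - bias


# Two inputs, each with its own weight, and a bias of 0.5 (illustrative values).
value = neuron_output([1.0, 2.0], [0.5, -1.0], 0.5)
print(value)  # 1.0*0.5 + 2.0*(-1.0) - 0.5 = -2.0
```

In practice an activation function (a threshold, a sigmoid…) is usually applied to this value to produce the neuron's final output.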

We can use a neuron to solve a problem:

We feed it the data of the problem and it returns the solution.

Changing the weights of a neuron changes the final response.

Here is a simplified program that uses this technique:

The position of a point is given as input and the neuron tries to guess whether the point is magenta or cyan.

For each example given to the neuron, its weights are adjusted to correct the response.

The neuron outputs 1 or 0 depending on whether it considers the point to be cyan or magenta.

Here the neuron's response is represented by the line drawn on the screen, which separates the points into two categories.

Each time the neuron is given a new point, it gets better at recognizing whether a point is cyan or magenta.
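The program itself is not reproduced here, so the following is only a sketch of the idea, assuming the classic perceptron learning rule and made-up data (points above the line y = x are cyan). The names `predict` and `train` are hypothetical.

```python
import random


def predict(weights, bias, point):
    """Return 1 (cyan) or 0 (magenta) for a 2D point."""
    total = weights[0] * point[0] + weights[1] * point[1] - bias
    return 1 if total > 0 else 0


def train(points, labels, epochs=1000, rate=0.1):
    """Adjust the weights after each misclassified example (perceptron rule)."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        mistakes = 0
        for point, label in zip(points, labels):
            error = label - predict(weights, bias, point)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights[0] += rate * error * point[0]
                weights[1] += rate * error * point[1]
                bias -= rate * error  # the bias is subtracted, so it moves the other way
        if mistakes == 0:  # every point is on the correct side of the line
            break
    return weights, bias


# Assumed data: points above the line y = x are cyan (1), the others magenta (0).
rng = random.Random(0)
points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(200)]
points = [p for p in points if abs(p[1] - p[0]) > 0.1]  # keep a small margin
labels = [1 if p[1] > p[0] else 0 for p in points]

weights, bias = train(points, labels)
correct = sum(predict(weights, bias, p) == l for p, l in zip(points, labels))
accuracy = correct / len(points)
print(weights, bias, accuracy)
```

The separating line drawn on the screen corresponds to the points where `weights[0] * x + weights[1] * y - bias` equals zero.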

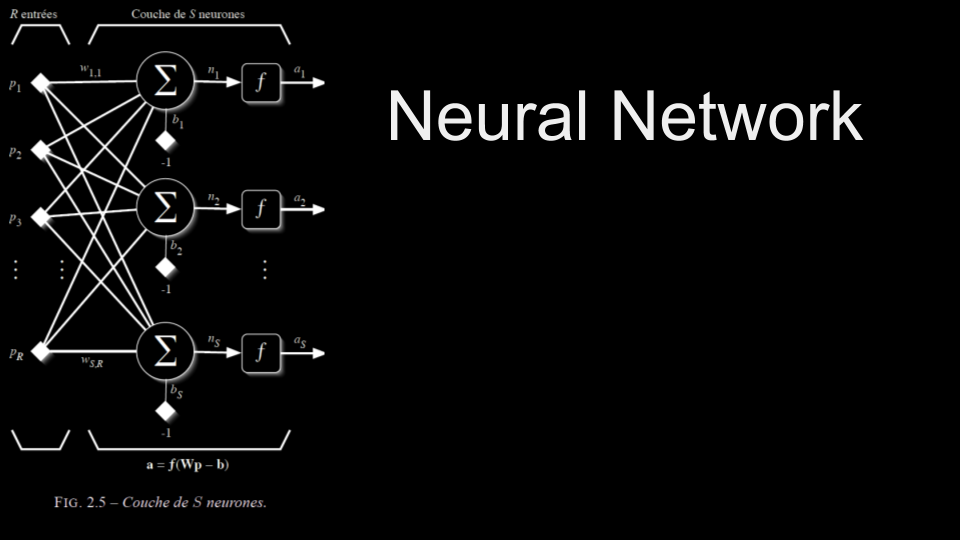

You can also use a network of several neurons to solve a problem.

We can then combine the outputs of the neurons to find the solution.

However, single-layer networks using this technique are limited and can only solve linearly separable problems.

With a complex point cloud that cannot be separated by a line, the network cannot find a solution that is always correct.
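The smallest point cloud of this kind is the XOR pattern: four points whose two classes sit on opposite diagonals. The sketch below (again assuming the perceptron rule, with hypothetical names) trains a single neuron on it and records the best score it ever reaches. Since no straight line separates the two classes, it can never get all four points right.

```python
def predict(weights, bias, point):
    """Return 1 or 0 depending on which side of the line the point falls."""
    total = weights[0] * point[0] + weights[1] * point[1] - bias
    return 1 if total > 0 else 0


# XOR pattern: the class is 1 only when exactly one coordinate is 1.
points = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 1, 1, 0]

weights, bias = [0.0, 0.0], 0.0
best_correct = 0
for _ in range(1000):
    for point, label in zip(points, labels):
        error = label - predict(weights, bias, point)
        weights[0] += 0.1 * error * point[0]
        weights[1] += 0.1 * error * point[1]
        bias -= 0.1 * error
    # Count how many of the four points are classified correctly after this pass.
    correct = sum(predict(weights, bias, p) == l for p, l in zip(points, labels))
    best_correct = max(best_correct, correct)

print(best_correct, "correct out of", len(points))
```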

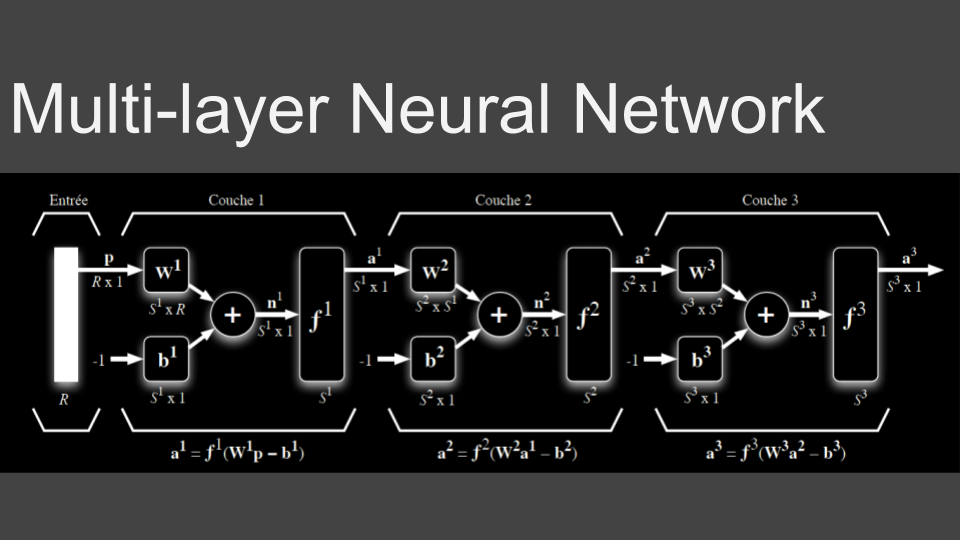

To go beyond this limit, you can use neural networks with multiple layers.

It then becomes necessary to find algorithms that let the network adjust its weights until it finds the right answer.

This is the learning process.
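To illustrate why an extra layer helps, here is a small sketch of a two-layer network that computes XOR, a problem that is not linearly separable. The weights are set by hand for the illustration (they are an assumption, not the result of any learning), and the function names are hypothetical.

```python
def step(value):
    """Threshold activation: 1 if the value is positive, else 0."""
    return 1 if value > 0 else 0


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs minus the bias, passed through the threshold."""
    return step(sum(x * w for x, w in zip(inputs, weights)) - bias)


def two_layer_xor(x1, x2):
    # Hidden layer: one neuron behaves like OR, the other like AND.
    h_or = neuron([x1, x2], [1.0, 1.0], 0.5)
    h_and = neuron([x1, x2], [1.0, 1.0], 1.5)
    # Output layer: fires when OR is on but AND is off.
    return neuron([h_or, h_and], [1.0, -1.0], 0.5)


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((x1, x2), two_layer_xor(x1, x2))
```

Finding such weights by hand is only possible for tiny networks, which is why a learning algorithm is needed.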

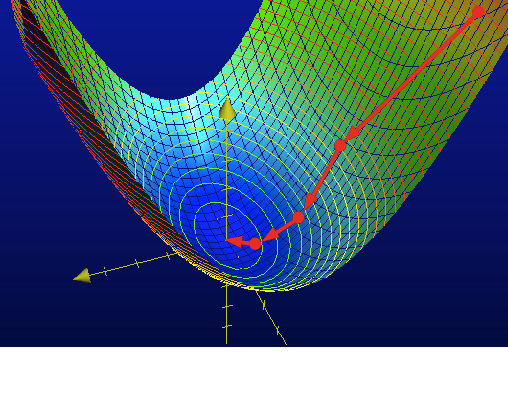

The technique I studied is called gradient descent:

The idea is to learn from a set of examples.

The error made by the network can be calculated. It is the difference between the output of the network and the desired output given by the example.

A neural network is only a chain of simple operations, so it can be written as one large function.

We can then define a function that takes the weights as input and returns the error made by the neural network on a given example.

The derivative of this function with respect to each weight tells us how to modify the weights to reduce the error.

Given a large number of examples, the neural network can then learn.
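The sketch below applies these steps to the simplest possible case: a single neuron with a sigmoid activation and a squared error, trained on one example. These choices, the starting values, and the names `error` and `gradient` are assumptions made for the illustration; a real network repeats the same idea over many weights and many examples.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def error(weights, bias, inputs, target):
    """Squared error of one sigmoid neuron on one example."""
    z = sum(x * w for x, w in zip(inputs, weights)) - bias
    return 0.5 * (sigmoid(z) - target) ** 2


def gradient(weights, bias, inputs, target):
    """Derivative of the error with respect to each weight and to the bias."""
    z = sum(x * w for x, w in zip(inputs, weights)) - bias
    out = sigmoid(z)
    delta = (out - target) * out * (1.0 - out)  # chain rule: dE/dz
    grad_w = [delta * x for x in inputs]        # dz/dw_i = x_i
    grad_b = -delta                             # dz/db = -1 (the bias is subtracted)
    return grad_w, grad_b


# Illustrative starting point and example.
weights, bias = [0.5, -0.3], 0.1
inputs, target = [1.0, 2.0], 1.0
rate = 0.5  # learning rate: how far to move against the derivative

before = error(weights, bias, inputs, target)
for _ in range(100):
    grad_w, grad_b = gradient(weights, bias, inputs, target)
    weights = [w - rate * g for w, g in zip(weights, grad_w)]
    bias -= rate * grad_b
after = error(weights, bias, inputs, target)

print(before, after)  # the error after training is smaller than before
```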

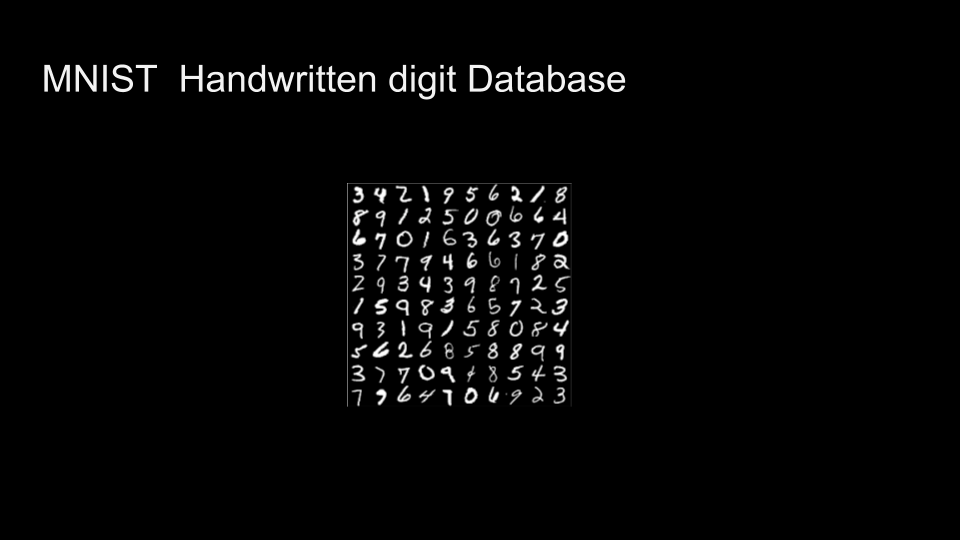

One example of learning is the recognition of handwritten digits.

To implement this algorithm I used Michael Nielsen’s book and code.

http://neuralnetworksanddeeplearning.com/

It is a Python implementation trained on MNIST, a database of handwritten digits; for each image, the network guesses which digit it shows.

Once training finishes, the success rate is about 94%.

We can retrieve the images on which the network was mistaken. Some of these mistakes seem stupid to us; in other cases, on the contrary, the digit really was very difficult to recognize.
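As a rough sketch of how the mistakes can be retrieved (this is not Michael Nielsen's code; the function names and the tiny made-up outputs are assumptions), the predicted digit is taken as the output neuron with the highest activation and compared with the true label:

```python
def predicted_digit(activations):
    """Return the index of the output neuron with the highest activation."""
    return max(range(len(activations)), key=lambda i: activations[i])


def misclassified(outputs, labels):
    """Return the indices of the examples the network got wrong."""
    return [
        i
        for i, (activations, label) in enumerate(zip(outputs, labels))
        if predicted_digit(activations) != label
    ]


# Made-up outputs for three images, with only 3 classes to keep it short.
outputs = [
    [0.1, 0.8, 0.1],  # predicts 1
    [0.7, 0.2, 0.1],  # predicts 0
    [0.2, 0.3, 0.5],  # predicts 2
]
labels = [1, 2, 2]

wrong = misclassified(outputs, labels)
success_rate = 1 - len(wrong) / len(labels)
print(wrong, success_rate)
```

The indices in `wrong` can then be used to display the corresponding images.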

The way the network recognizes a number is quite different from ours.

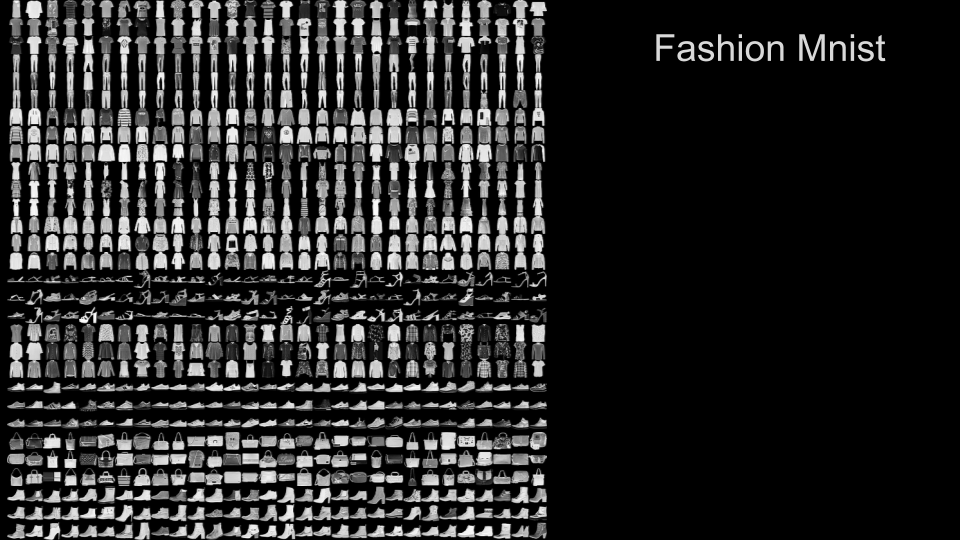

We can also adapt the code to process other image databases such as Fashion-MNIST, whose goal is to recognize clothing items (shoes, shirts…).

This problem is much more difficult. Success rates drop to about 84% on average.

This, however, is slightly higher than the average human success rate, which is about 83.5%.

## Sources

Marc Parizeau:

http://wcours.gel.ulaval.ca/2014/h/GIF4101/default/7references/reseauxdeneurones.pdf

http://neuralnetworksanddeeplearning.com/

https://www.youtube.com/watch?v=aircAruvnKk